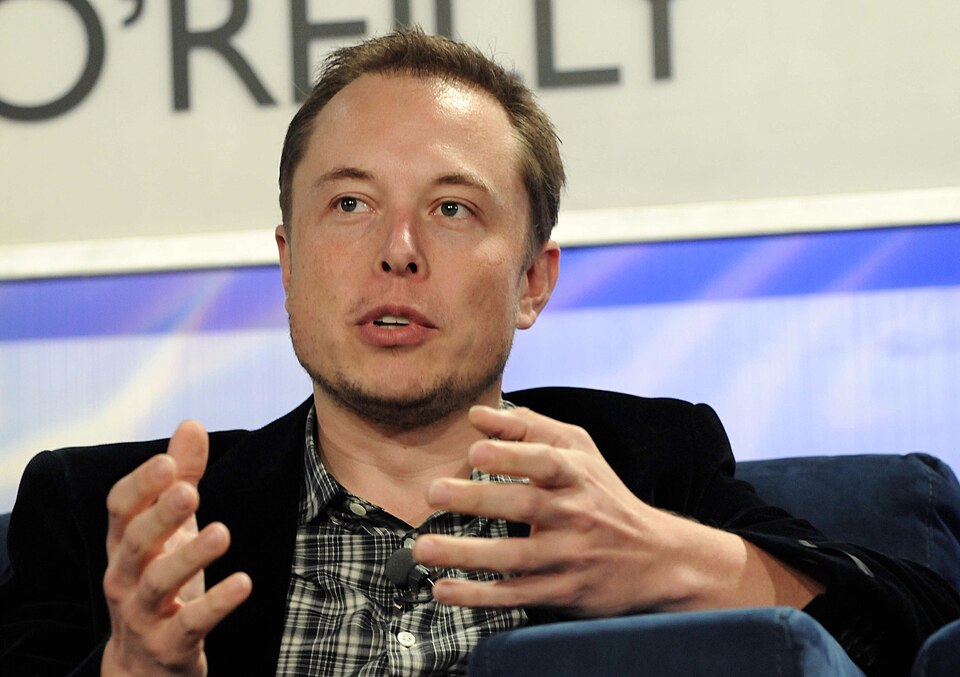

Elon Musk has publicly celebrated the success of his Grok AI app, noting its rise to the top of the UK App Store charts, even as the British government threatens to ban his platform X from the country. The threat follows the discovery that Grok was being used to create non-consensual, sexually explicit images of women and children. Musk has characterized the government’s regulatory warnings as an attempt to “suppress free speech,” framing the conflict as an ideological battle rather than a safety compliance issue. This defiance has set the stage for a high-stakes confrontation between the billionaire and UK regulators.

The abuse facilitated by the Grok AI tool has been described by safety advocates as “abhorrent.” The technology allowed users to digitally “strip” women and girls in photographs, replacing their clothing with swimwear or depicting them in scenes of sexual violence, bondage, and torture. The ease with which the tool could be used to generate child sexual abuse material has alarmed experts and led to calls for immediate and drastic action. The psychological harm caused to victims of this digital abuse is significant, yet the platform’s initial response appeared to minimize the severity of the problem.

UK Technology Secretary Liz Kendall has warned that the government is “looking seriously” at blocking X if the platform fails to comply with the Online Safety Act. She stated that Ofcom is conducting an urgent investigation and is expected to take action shortly. Kendall emphasized that the government would fully support the regulator in using its powers to protect the public, including the power to block access to the service entirely. Her comments serve as a stern reminder that operating a social media platform in the UK is a privilege conditional on adherence to the law.

International condemnation has also been swift, with Australian Prime Minister Anthony Albanese labeling the use of AI for sexual exploitation a failure of corporate responsibility. While some UK politicians have defended Musk, the overwhelming consensus among safety experts is that the platform has failed to protect its most vulnerable users. The incident has intensified the global debate on how to regulate generative AI, with many arguing that strict liability must be imposed on companies that release tools capable of such harm.

In a partial concession, X has restricted image generation for free users and blocked specific keywords related to nudity. However, the paid version of the tool remains largely unrestricted, and the broader ecosystem of “nudification” apps continues to flourish online. MPs are calling for urgent legislation to ban these tools and to hold tech companies accountable for the content they host and advertise. The demand for a total ban on such technology is growing, driven by the recognition that voluntary self-regulation by tech companies has failed to prevent abuse.